Explain

With our explain endpoint, you can get an explanation of the model's output.

In more detail, we return how much the log-probabilities of the already generated completion would change if we suppressed individual parts of the prompt. The size of those parts depends on the granularity you choose.
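To make the idea of a suppression-based score concrete, here is a minimal sketch that runs without the API. It rests on assumptions: `toy_log_prob` is a hypothetical stand-in for a model, parts are suppressed by simply leaving them out, and the sign convention (a positive score means the part supported the completion) is chosen for illustration. The endpoint computes its scores inside the model and may differ in all three respects.

```python
import math


def toy_log_prob(parts, target):
    """Hypothetical stand-in for a model: log-probability of `target` given `parts`."""
    # Each prompt part that mentions the target makes the target more likely.
    hits = sum(target.lower() in part.lower() for part in parts)
    return math.log((1 + 4 * hits) / (6 + 4 * hits))


def suppression_scores(parts, target):
    """Change in log-probability of `target` when each part is left out in turn."""
    baseline = toy_log_prob(parts, target)
    scores = []
    for i in range(len(parts)):
        remaining = parts[:i] + parts[i + 1:]
        # Assumed convention: positive means the part supported the target.
        scores.append(baseline - toy_log_prob(remaining, target))
    return scores


parts = [
    "Answer the question based on the context.",
    "Context: Rome was founded on April 21, 753 BC.",
    "Q: In which month was Rome founded?",
    "A:",
]
scores = suppression_scores(parts, "April")
for part, score in zip(parts, scores):
    print(f"{score:+.3f}  {part}")
```

In this toy setup only the context sentence mentions the target, so it is the only part whose removal changes the log-probability.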

Please refer to this part of our documentation if you would like to know more about our explainability method in general.

Code Example Text

from aleph_alpha_client import Client, Prompt, CompletionRequest, ExplanationRequest
import numpy as np
import os
from time import time

client = Client(token=os.getenv("AA_TOKEN"))
MODEL = "luminous-supreme"

prompt_text = """Answer the question based on the context.

Context: According to tradition, on April 21, 753 BC, Romulus and his twin brother Remus founded Rome in the place where they had been suckled as orphans by a she-wolf.

Q: In which month was Rome founded?

A:"""

# Generate a single-token completion for the prompt
params = {
    "prompt": Prompt.from_text(prompt_text),
    "maximum_tokens": 1,
}
request = CompletionRequest(**params)
response = client.complete(request=request, model=MODEL)
completion = response.completions[0].completion

print(f"Prompt:\n{prompt_text}")
print(f"Completion:\n{completion}")  # April

# Explain the completion at sentence granularity
exp_req = ExplanationRequest(Prompt.from_text(prompt_text), completion, prompt_granularity="sentence")
response_explain = client.explain(exp_req, model=MODEL)

for explanation in response_explain.explanations:
    for item in explanation.items:
        for score in item.scores:
            # Each score covers the character span [start, end) of the prompt text
            start = score.start
            end = score.start + score.length
            print(f"EXPLAINED TEXT: {prompt_text[start:end]}")
            print(f"Score: {np.round(score.score, decimals=3)}\n")

# Prints:
# EXPLAINED TEXT: Answer the question based on the context.
# Score: -0.367
# EXPLAINED TEXT: Context: According to tradition, on April 21, 753 BC, Romulus and his twin brother Remus founded Rome in the place where they had been suckled as orphans by a she-wolf.
# Score: 0.856
# EXPLAINED TEXT: Q: In which month was Rome founded?
# Score: 1.214
# EXPLAINED TEXT: A:
# Score: 1.422

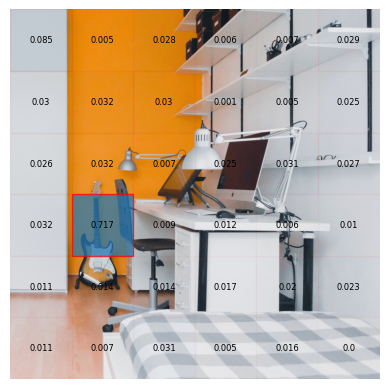
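When a prompt has many sentences, it can help to sort the explained spans by score before reading them. The sketch below shows one way to do that. `SpanScore` is a hypothetical stand-in that only mirrors the `start`, `length` and `score` fields read in the example above, and the score values are made up for illustration, not model output.

```python
from dataclasses import dataclass


@dataclass
class SpanScore:
    """Hypothetical stand-in mirroring the start/length/score fields used above."""
    start: int
    length: int
    score: float


def rank_spans(text, span_scores, top_k=None):
    """Return (span_text, score) pairs sorted from highest to lowest score."""
    ranked = sorted(span_scores, key=lambda s: s.score, reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    return [(text[s.start:s.start + s.length], s.score) for s in ranked]


text = "Answer the question based on the context.\nQ: In which month was Rome founded?\nA:"
sentences = text.split("\n")
values = [-0.4, 1.2, 1.4]  # made-up scores, for illustration only

# Build one span per sentence, tracking character offsets into `text`
spans = []
offset = 0
for sentence, value in zip(sentences, values):
    start = text.index(sentence, offset)
    spans.append(SpanScore(start, len(sentence), value))
    offset = start + len(sentence)

top = rank_spans(text, spans, top_k=2)
for span_text, score in top:
    print(f"{score:+.1f}  {span_text}")
```

With real results, the inner `item.scores` list from the example above could be passed in place of `spans`, assuming its entries expose the same three fields.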

Code Example Image

First, we need to define a function that lets us visualize the image with the explanations.

# Relies on plt, patches and np, which are imported in the script below.
def explain_token(result, token_index, image, original_image_width, original_image_height):
    print(f"Explanation for the token '{result.explanations[token_index].target}'")
    # Use the result passed in rather than a global variable
    token_explanation = result.explanations[token_index]

    ax = plt.gca()

    # Display the image
    ax.imshow(image)

    # The first prompt item is the image; its scores are rectangular patches
    for image_token in token_explanation.items[0].scores:
        left = image_token.left
        top = image_token.top
        width = image_token.width
        height = image_token.height
        score = np.round(image_token.score, decimals=3)

        # Create a Rectangle patch whose opacity reflects the score
        rect = patches.Rectangle((left, top), width, height, linewidth=1, edgecolor='r', alpha=score)
        ax.add_patch(rect)

        # Write the score at the center of the patch
        rx, ry = rect.get_xy()
        cx = rx + rect.get_width()/2.0
        cy = ry + rect.get_height()/2.0
        ax.annotate(f"{score}", (cx, cy), color='black', fontsize=6, ha='center', va='center')

    plt.axis('off')
    plt.show()

Now we can run the actual explanation.

import os
from aleph_alpha_client import (
    Client,
    Prompt,
    Text,
    Image,
    ExplanationRequest,
    CompletionRequest,
    ExplanationPostprocessing
)
import matplotlib.pyplot as plt
import matplotlib.patches as patches
import numpy as np
from PIL import Image as PILImage

client = Client(token=os.getenv("AA_TOKEN"))

# Build a multimodal prompt: the image first, then the text
image_path = "path/to/your/image"
image = Image.from_image_source(image_path)
prompt_text = "The instrument on the picture is a"
text = Text.from_text(prompt_text)
prompt = Prompt([image, text])

params = {
    "prompt": prompt,
    "maximum_tokens": 1,
}
request = CompletionRequest(**params)
completion_result = client.complete(request, "luminous-extended")
completion = completion_result.completions[0].completion

print(f"""MODEL COMPLETION: {completion.strip()}""")

explain_params = {
    "prompt": prompt,
    "target": completion,
    "normalize": True,
    "postprocessing": ExplanationPostprocessing("absolute")
}
request = ExplanationRequest(**explain_params)
exp_result = client.explain(request, "luminous-extended")
exp_result = exp_result.with_image_prompt_items_in_pixels(prompt)

# items[0] belongs to the image, items[1] to the text part of the prompt
text_explanations_first_token = exp_result.explanations[0].items[1].scores

for item in text_explanations_first_token:
    start = item.start
    end = item.start + item.length
    print(f"EXPLAINED TEXT: {prompt_text[start:end]}")
    print(f"Score: {np.round(item.score, decimals=3)}")

# prints:
# MODEL COMPLETION: guitar
# EXPLAINED TEXT: The
# Score: 0.077
# EXPLAINED TEXT: instrument
# Score: 1.0
# EXPLAINED TEXT: on
# Score: 0.112
# EXPLAINED TEXT: the
# Score: 0.051
# EXPLAINED TEXT: picture
# Score: 0.117
# EXPLAINED TEXT: is
# Score: 0.001
# EXPLAINED TEXT: a
# Score: 0.001

original_image = PILImage.open(image_path)
original_image_width = original_image.width
original_image_height = original_image.height

explain_token(exp_result, 0, original_image, original_image_width, original_image_height)

# outputs:
# Explanation for the token ' guitar'
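Besides plotting every patch, it is sometimes enough to know which image regions scored highest. The following sketch picks the top patches and reports their centers. `PatchScore` is a hypothetical stand-in that only mirrors the `left`, `top`, `width`, `height` and `score` fields read in `explain_token`, and the grid values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class PatchScore:
    """Hypothetical stand-in with the fields read in explain_token."""
    left: float
    top: float
    width: float
    height: float
    score: float


def top_patches(patch_scores, k=3):
    """Return the k highest-scoring patches as (center_x, center_y, score)."""
    ranked = sorted(patch_scores, key=lambda p: p.score, reverse=True)[:k]
    return [(p.left + p.width / 2, p.top + p.height / 2, p.score) for p in ranked]


# Made-up 2x2 grid of patches over a 200x100 pixel image
patches_demo = [
    PatchScore(0, 0, 100, 50, 0.10),
    PatchScore(100, 0, 100, 50, 0.75),
    PatchScore(0, 50, 100, 50, 1.00),
    PatchScore(100, 50, 100, 50, 0.25),
]

best = top_patches(patches_demo, k=2)
for cx, cy, score in best:
    print(f"center=({cx}, {cy}) score={score}")
```

With a real result, the image scores from the example above (`exp_result.explanations[0].items[0].scores`, after the conversion to pixels) could be passed in place of `patches_demo`, assuming they expose the same fields.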