Explainability

In the previous section, we explained how you can steer the attention of our models and either suppress or amplify parts of the input sequences. Very roughly speaking, our explainability method uses AtMan to suppress individual parts of a prompt to find out how they would change the probabilities of the generated completion relative to each other. In the following example, we will investigate which part of the prompt influenced the completion the most.

I

0.829

am

-0.246

French

1.214

.

0.445

My

0.193

favourite

0.766

food

0.790

is

-0.010

cheese

Below, find the changes in the log-probs of the distribution if we suppress the respective word. We see that the word "French" contributed to the completion "cheese" the most. Let's see how this changes if we adjust the prompt:

I

0.055

am

-0.048

French

-1.025

and

-0.275

a

-0.0811

programmer

0.349

.

-0.141

My

0.125

favourite

0.779

food

0.278

is

0.079

pizza

This new completion and the respective scores are interesting for a couple of reasons. We get a different completion even though we only changed the prompt marginally. The words "programmer", "favourite" and "food" contributed positively to the completion and their combination of these words led to the final completion "pizza". Still, the explanations show that "French" has the greatest influence on the completion, just in a negative direction this time. This means that the word "French" made the completion "pizza" less likely.

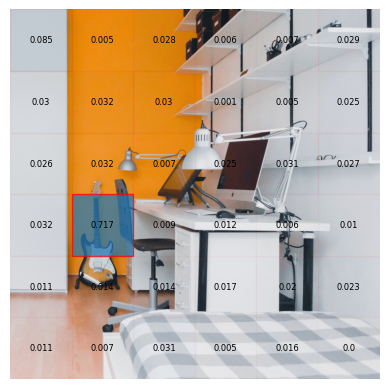

Explainability on Images

We also offer our explainability method for multimodal input. If you provide text and an image as input, you can see how the text and the image contributed to the completion.

The

0.077

instrument

1.0

on

0.112

the

0.051

picture

0.117

is

0.001

a

0.001

guitar.

The word "instrument" as well as the tile with the head of the guitar contributed most to the completion.

Hallucination Detection

Large Language Models tend to hallucinate at times. That means that they can produce completions that seem plausible, but contain made-up, irrelevant, or incorrect information. Consider the following example, where we ask a question that the model answers based on world knowledge and not the context.

Answer the question based on the context.

0.216

Context: According to tradition, on April 21, 753 BC, Romulus and his twin brother Remus founded Rome in the place where they had been suckled as orphans by a she-wolf.

-0.181

Q: In which country is Rome located?

3.710

A:

0.110

Italy

We see that the context did not contribute to the explanation at all, indicating that the answer is hallucinated. If we ask a question where the answer is in the text, the contribution of the prompt is way higher, giving us a good indication of the quality of the reply.

Answer the question based on the context.

0.276

Context: According to tradition, on April 21, 753 BC, Romulus and his twin brother Remus found Rome in the place where they had been suckled as orphans by a she-wolf.

0.85

Q: In which month was Rome founded?

2.205

A:

0.776

April

Now, the context had a significant positive influence on the completion. Therefore, we can assume that the answer is not hallucinated and stems from the context that we provided in the prompt.