How to troubleshoot PhariaFinetuning

Restoring AIM Backup from S3

The AIM deployment that ships with the fine-tuning app includes a service that backs up the AIM folder (.aim) to the configured S3 bucket once a week, every Saturday at 00:00 CET.

If the Persistent Volume Claim (PVC) for the AIM database is deleted or corrupted, follow these steps to restore the last backup from the S3 bucket:

1. Locate the Relevant S3 Bucket

Check the Helm values of your Pharia AI installation:

- If pharia-finetuning.minio.enabled is set to true:
  - The backup is stored in the internal MinIO installation named by pharia-finetuning.minio.fullnameOverride, in a bucket called "pharia-finetuning".
- Otherwise:
  - The backup is stored in the external storage bucket configured via pharia-finetuning.pharia-finetuning.
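One way to check these values from the command line is `helm get values` with `--all`, which prints the computed values of a release (your overrides merged with the chart defaults). A minimal sketch, assuming your Pharia AI release and namespace are both called `pharia-ai`; these names are hypothetical, so substitute your own:

```shell
# Hypothetical names: replace with your Pharia AI release and namespace.
RELEASE="${RELEASE:-pharia-ai}"
NAMESPACE="${NAMESPACE:-pharia-ai}"

# Print all computed values of the release (user-supplied values merged with
# chart defaults) as YAML, so you can inspect pharia-finetuning.minio.enabled
# and pharia-finetuning.minio.fullnameOverride.
show_values() {
  helm get values "$RELEASE" --namespace "$NAMESPACE" --all --output yaml
}
```

Run `show_values` and look for the `minio` section under `pharia-finetuning` to see which of the two cases applies.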

2. Locate the Backup File in the S3 Bucket

- Each backup zip file follows the naming convention:
  aim-<year>-<month>-<day>-<hour>-<minute>-<second>.zip
- Example: aim-2024-12-05-13-17-04.zip
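Because the example file name pads every date and time field with zeros, sorting the names lexically also sorts them chronologically, so the newest backup is the last one. The helper below is a hypothetical sketch that assumes every backup uses that zero-padded format:

```shell
# Pick the most recent AIM backup from a list of file names read on stdin.
# Assumes zero-padded names such as aim-2024-12-05-13-17-04.zip, for which
# lexical order equals chronological order. Other files are ignored.
latest_backup() {
  grep -E '^aim-[0-9]{4}(-[0-9]{2}){5}\.zip$' | sort | tail -n 1
}
```

For example, `aws s3 ls s3://<YOUR_S3_BUCKET>/ | awk '{print $4}' | latest_backup` lists the bucket, keeps the object-name column, and prints the newest backup file name.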

3. SSH into the AIM Deployment

Gain shell access (for example, via SSH or kubectl exec) to the AIM pod that mounts the new PVC.
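With kubectl, you can open a shell in the AIM pod without SSH. A minimal sketch, assuming the namespace `pharia-ai` and the deployment name `pharia-finetuning-aim` (both hypothetical) and a container image that provides `/bin/sh`:

```shell
# Hypothetical names: look yours up with "kubectl get deployments -n <namespace>".
NAMESPACE="${NAMESPACE:-pharia-ai}"
AIM_DEPLOYMENT="${AIM_DEPLOYMENT:-pharia-finetuning-aim}"

# Open an interactive shell in a pod of the AIM deployment.
# kubectl selects one pod of the deployment; that pod mounts the AIM PVC.
aim_shell() {
  kubectl exec -it -n "$NAMESPACE" "deployment/$AIM_DEPLOYMENT" -- /bin/sh
}
```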

4. Download the Backup File

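From inside the AIM pod, run the following command to download the backup, replacing <YOUR_S3_BUCKET> with the bucket from step 1 and the file name with the backup you selected in step 2: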

aws s3 cp s3://<YOUR_S3_BUCKET>/aim-2024-12-05-13-17-04.zip /aim-backup.zip
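If the backup lives in the internal MinIO installation, the AWS CLI has to be pointed at MinIO with its global `--endpoint-url` option, and it needs MinIO credentials (for example via `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`). A sketch under that assumption; the `S3_ENDPOINT` variable and the `download_backup` helper are hypothetical:

```shell
# Hypothetical: leave S3_ENDPOINT empty for AWS S3, or set it to the URL of
# your MinIO service (for example http://<minio-service>:9000).
S3_ENDPOINT="${S3_ENDPOINT:-}"

# Download one backup archive to /aim-backup.zip.
# Usage: download_backup <bucket> <backup-file-name>
download_backup() {
  if [ -n "$S3_ENDPOINT" ]; then
    aws s3 cp --endpoint-url "$S3_ENDPOINT" "s3://$1/$2" /aim-backup.zip
  else
    aws s3 cp "s3://$1/$2" /aim-backup.zip
  fi
}
```

For example: `download_backup pharia-finetuning aim-2024-12-05-13-17-04.zip`.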

5. Restore the Backup

Unzip the backup file into the .aim folder at the /aim mount point:

unzip /aim-backup.zip -d /aim/.aim
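Before extracting, it can help to confirm that the download is intact. The sketch below adds two things to the command above: an integrity test with `unzip -t` and the `-o` flag, which overwrites existing files without prompting. It assumes the archive holds the contents of the .aim folder; if `unzip -l /aim-backup.zip` shows a top-level .aim directory instead, extract into /aim so the folder is not nested twice.

```shell
# Hypothetical helper: verify the archive, then extract it into /aim/.aim.
restore_backup() {
  # Test the archive's integrity before touching the AIM folder.
  if ! unzip -tq /aim-backup.zip >/dev/null; then
    echo "backup archive is corrupt or incomplete" >&2
    return 1
  fi
  # -o overwrites existing files without prompting; unzip creates /aim/.aim if missing.
  unzip -o /aim-backup.zip -d /aim/.aim
}
```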

6. Restart the AIM Deployment

Restart AIM to apply the changes, then open the AIM dashboard to verify that the backup has been restored.

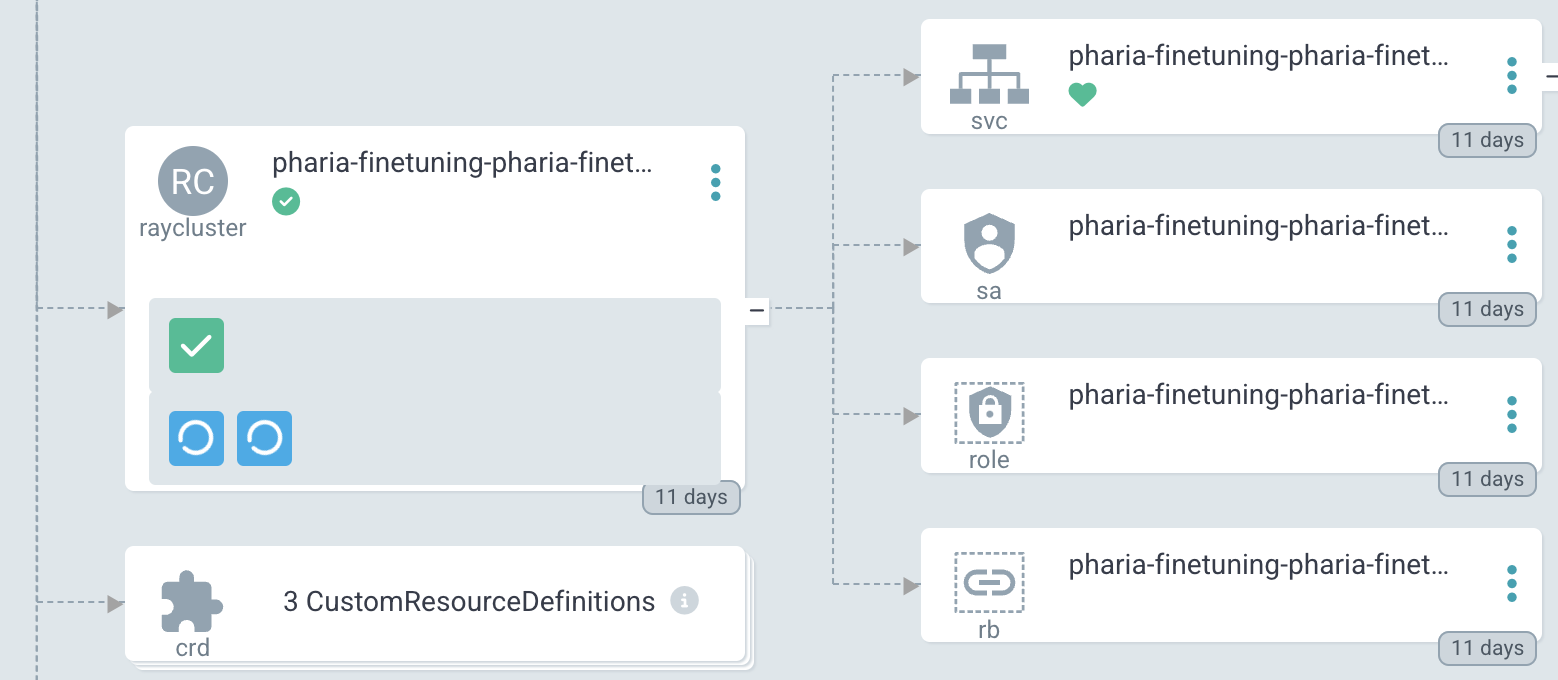
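If you prefer kubectl over the ArgoCD UI, a rolling restart of the deployment has the same effect. A minimal sketch, assuming the hypothetical namespace `pharia-ai` and deployment name `pharia-finetuning-aim`:

```shell
# Hypothetical names: replace with your namespace and AIM deployment.
NAMESPACE="${NAMESPACE:-pharia-ai}"
AIM_DEPLOYMENT="${AIM_DEPLOYMENT:-pharia-finetuning-aim}"

# Restart the AIM pods, then wait until the new pods are ready.
restart_aim() {
  kubectl rollout restart "deployment/$AIM_DEPLOYMENT" -n "$NAMESPACE"
  kubectl rollout status "deployment/$AIM_DEPLOYMENT" -n "$NAMESPACE"
}
```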

Restarting the Ray Cluster

The Ray cluster might need to be restarted to recover from faults or to apply new infrastructure changes.

⚠ Warning: Restarting will terminate all ongoing jobs, and past logs will only be accessible via the AIM dashboard.

Steps to Restart the Cluster

1. Go to the ArgoCD Dashboard
   - Locate the Pharia fine-tuning application.
2. Find the Kubernetes Resource for the Ray Cluster
   - Identify the head node pod, e.g., pharia-learning-pharia-finetuning-head.
3. Delete the Head Node Pod
   - Click on the three dots next to the pod name.
   - Select Delete.
   - The cluster will reboot in a few minutes.
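The same restart can be triggered with kubectl by deleting the head pod directly. A sketch assuming the hypothetical namespace `pharia-ai` and a head pod whose name contains `pharia-finetuning-head`, as in the example above; the same warning applies, since this terminates all running jobs:

```shell
# Hypothetical namespace: replace with the one hosting the Ray cluster.
NAMESPACE="${NAMESPACE:-pharia-ai}"

# Find the Ray head pod by name and delete it; its controller recreates it,
# which restarts the cluster.
restart_ray_head() {
  pod=$(kubectl get pods -n "$NAMESPACE" -o name | grep -- 'pharia-finetuning-head' | head -n 1)
  if [ -z "$pod" ]; then
    echo "no Ray head pod found in namespace $NAMESPACE" >&2
    return 1
  fi
  kubectl delete -n "$NAMESPACE" "$pod"
}
```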

Alternative: Restart Using Ray CLI

We have not tested this ourselves, but according to the Ray CLI documentation:

- If there are no configuration changes, use ray up. This stops and starts the head node first, then each worker node.
- If configuration changes were introduced, ray up will update the cluster instead of restarting it.
- See the Ray documentation for the available arguments to customize cluster updates and restarts.
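As an untested illustration, `ray up` takes the cluster launcher config file as its argument, and its `--restart-only` and `--no-restart` flags select between restarting Ray and applying an update without a restart. The sketch assumes a hypothetical config file named `cluster.yaml`; whether this cluster is managed through a launcher config at all is also an assumption:

```shell
# Hypothetical path to the Ray cluster launcher config of this cluster.
CLUSTER_CONFIG="${CLUSTER_CONFIG:-cluster.yaml}"

# Restart Ray on the existing nodes without re-running setup commands.
ray_restart() {
  ray up "$CLUSTER_CONFIG" --restart-only --yes
}

# Apply configuration changes without restarting the Ray services.
ray_update_no_restart() {
  ray up "$CLUSTER_CONFIG" --no-restart --yes
}
```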

Handling Insufficient Resources

When you submit a new job to the Ray cluster, it starts only once the resources it requests are available. If those resources are occupied, the job waits until they are freed.

Possible reasons for delays in job execution:

- Another job is still running.

- The requested machine type is not yet available.
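To see which of these applies, `ray status` on the head node reports the cluster's total and used resources and any pending resource demands. A minimal sketch, assuming the hypothetical namespace `pharia-ai` and a head pod named as in the restart example above (the real pod name usually carries an extra suffix):

```shell
NAMESPACE="${NAMESPACE:-pharia-ai}"
# Hypothetical: find the exact head pod name with "kubectl get pods -n <namespace>".
RAY_HEAD_POD="${RAY_HEAD_POD:-pharia-learning-pharia-finetuning-head}"

# Run "ray status" on the head node to show used versus available CPUs, GPUs
# and memory, plus resource demands that cannot be scheduled yet.
show_ray_resources() {
  kubectl exec -n "$NAMESPACE" "$RAY_HEAD_POD" -- ray status
}
```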

When a Job Fails Due to Insufficient Resources

Ensure that the job’s resource requirements align with the available cluster resources:

✅ Check if GPUs are available

- If your job requires GPUs, ensure that there is a worker group configured with GPU workers.

✅ Check worker limits

- Ensure the number of workers requested does not exceed the maximum replicas allowed in the configuration.

✅ Increase GPU memory for large models

- If training a large model, increase the GPU memory limit for workers in the worker pool.

✅ Handle out-of-memory (OOM) issues

- If the head node runs out of memory, increase its memory limit to allow it to manage jobs effectively.
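If the cluster is managed by the KubeRay operator (an assumption: it exposes the cluster as a RayCluster resource), the checks above can be done with kubectl. The sketch prints each worker group's maximum replicas and container limits, the head container's limits, and the allocatable GPUs per node; the namespace `pharia-ai` is hypothetical:

```shell
NAMESPACE="${NAMESPACE:-pharia-ai}"

# Worker groups: name, maxReplicas and resource limits of the first container.
show_worker_limits() {
  kubectl get raycluster -n "$NAMESPACE" -o jsonpath='{range .items[*].spec.workerGroupSpecs[*]}{.groupName}{"\t"}{.maxReplicas}{"\t"}{.template.spec.containers[0].resources.limits}{"\n"}{end}'
}

# Head node: resource limits of the first container (relevant for head OOMs).
show_head_limits() {
  kubectl get raycluster -n "$NAMESPACE" -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.headGroupSpec.template.spec.containers[0].resources.limits}{"\n"}{end}'
}

# Allocatable GPUs per node; <none> means the node advertises no NVIDIA GPUs.
show_node_gpus() {
  kubectl get nodes -o 'custom-columns=NODE:.metadata.name,GPUS:.status.allocatable.nvidia\.com/gpu'
}
```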