PhariaAI v1.250900.0 – Release Notes

| Release Version | v1.250900.0 |
| Release Date | September 10, 2025 |
| Availability | On-premise and hosted |

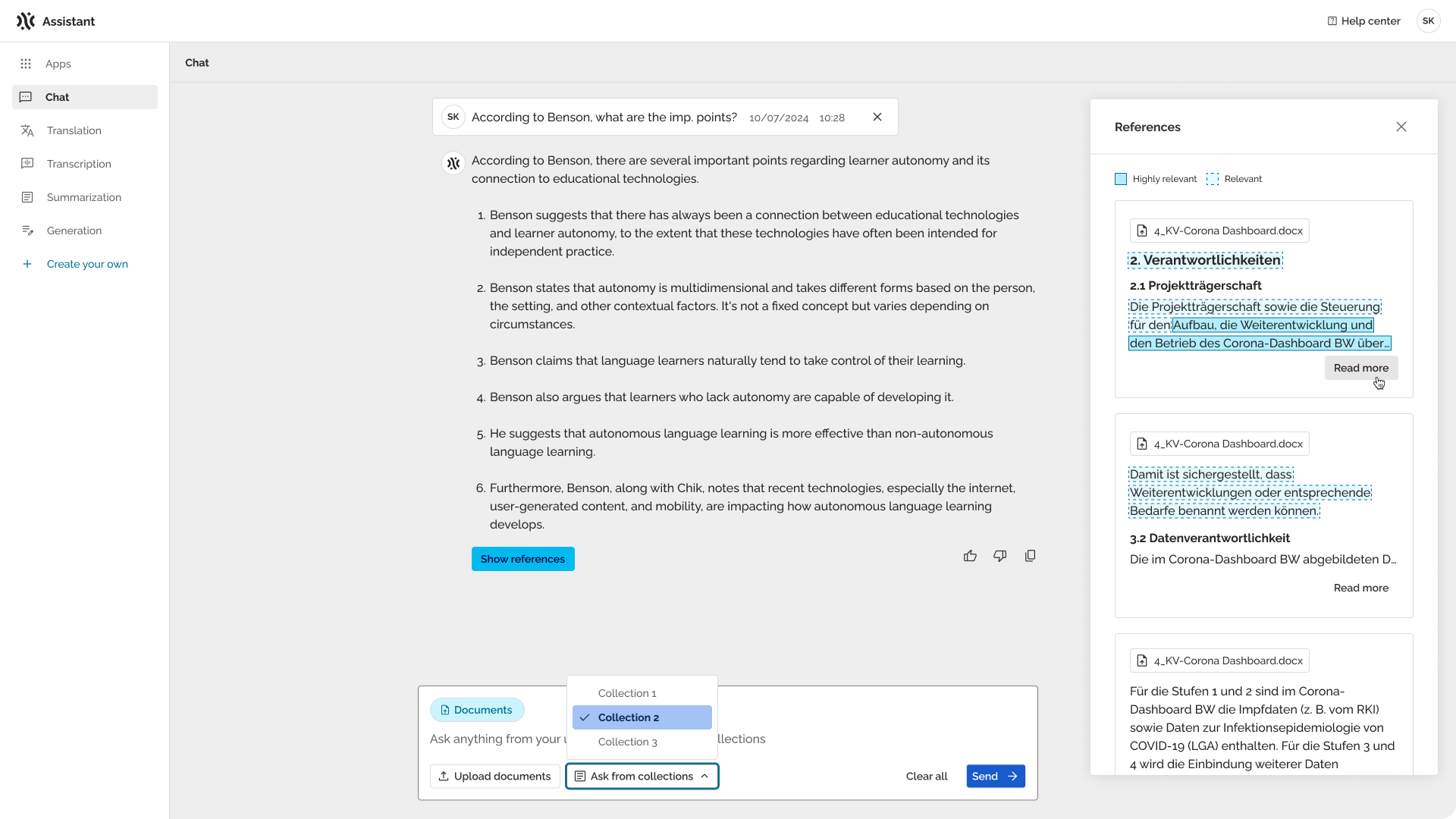

PhariaAssistant

This release introduces major enhancements to PhariaAssistant, focused on observability, faster app development, and data-driven decision-making. With new capabilities, teams can monitor performance, accelerate solution delivery, and align adoption with business goals.

OpenTelemetry Integration: Seamlessly connect to observability platforms with standard telemetry formats

| Availability | On-premise and hosted |

PhariaAssistant now supports OpenTelemetry, Grafana Faro, and Sentry instrumentation for telemetry data. Customers configure integrations through Helm charts by providing Faro credentials or an OpenTelemetry exporter URL. This is the first officially supported, standardized telemetry export for PhariaAssistant, and it enables centralized monitoring, faster troubleshooting, and vendor-agnostic compatibility.

Key Benefits:

- Observability integration: Connect Assistant to existing monitoring tools for unified metrics collection and performance analysis;
- Standardized logging: Leverage OpenTelemetry’s industry-standard format to streamline data integration with other applications;
- Improved operational efficiency: Gain actionable insights into Assistant’s performance and errors, reducing resolution time.

This release supports only platforms that receive data through OpenTelemetry exporters (e.g., Grafana Faro); services that are not exporter-based, such as Sentry without exporter mode, are not supported.

Summarization And Generation Templates: Accelerate custom app development with PhariaAI CLI templates

| Availability | On-premise and hosted |

PhariaAI CLI now offers reusable templates for Summarization and Generation applications, allowing engineering teams to rapidly deploy custom solutions without manual setup. Automated synchronization ensures templates evolve alongside core application updates, while a built-in feedback mechanism lets users contribute insights directly within their applications to drive iterative enhancements.

Key Benefits:

- Faster development of custom apps: Jump-start use cases with pre-built templates, reducing time-to-market;
- Built-in feedback loop: Directly share input within applications to shape future improvements;
- Scalable extensibility: Always leverage the latest core application advancements through automatic updates.

Existing applications created with PhariaAI CLI cannot be retroactively updated to align with core application improvements, and templates for Chat and Transcribe applications are not included in this release.

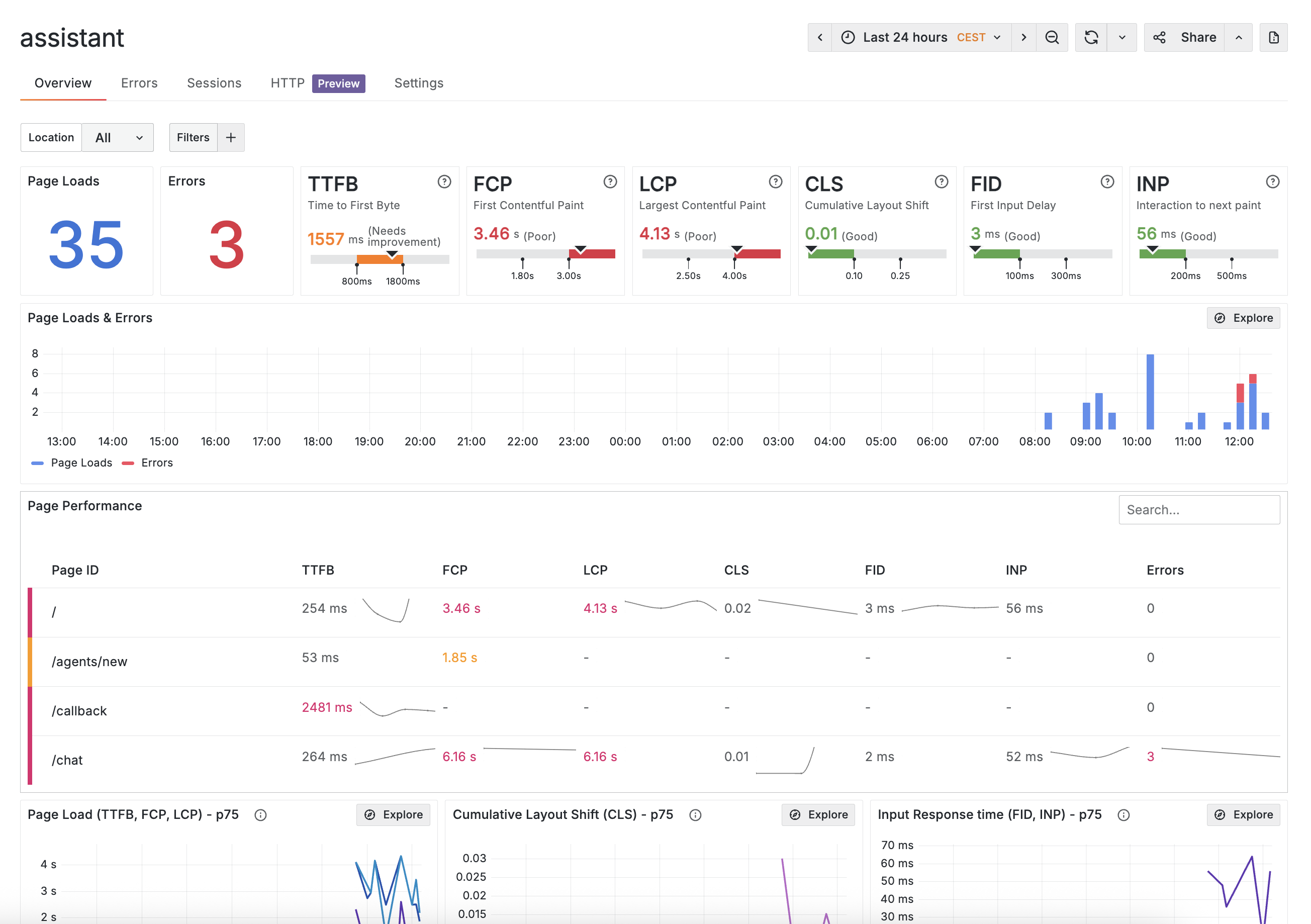

Product Analytics: Gain insights into user behavior and feature adoption for data-driven decisions

| Availability | On-premise and hosted |

Product Analytics for PhariaAssistant now provides event tracking and aggregation of user activities (e.g., active users, sessions, feature adoption) to help customers and internal teams understand usage patterns and align development with business goals. By connecting to external analytics platforms, customers can visualize metrics such as total users per instance, sessions, and feature adoption rates, enabling proactive optimization of their Assistant deployments. This marks a shift from a previously analytics-free product to a data-informed solution.

Key Benefits:

- Actionable insights: Track sessions per user/company and adoption rates to identify trends and underused features;
- Flexibility across environments: Supports both SaaS and on-prem setups for consistent analytics integration;
- Data-driven decisions: Use aggregated metrics to prioritize improvements and measure ROI;
- Alignment with business objectives: Connect Assistant usage to strategic goals for targeted development.

Initial scope includes core metrics (users, sessions, feature adoption) with limited event types; on-prem customers must self-manage GDPR compliance, and fine-grained retention policies/anonymization are pending implementation.
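The core metrics named above (active users, sessions, feature adoption) can be illustrated with a minimal aggregation sketch. The release notes do not define an event schema, so the field names `user_id`, `session_id`, and `feature` are hypothetical, and the code does not reflect how PhariaAssistant computes its metrics internally.

```python
from collections import defaultdict

def aggregate_events(events):
    """Aggregate raw usage events into users, sessions, and feature adoption.

    Each event is a dict with hypothetical keys: user_id, session_id, feature.
    """
    users = set()
    sessions = set()
    users_per_feature = defaultdict(set)

    for event in events:
        users.add(event["user_id"])
        sessions.add(event["session_id"])
        # Events without a feature (e.g. a plain login) still count as activity.
        if event.get("feature"):
            users_per_feature[event["feature"]].add(event["user_id"])

    total_users = len(users)
    # Adoption rate = share of active users who used the feature at least once.
    adoption = {
        feature: len(feature_users) / total_users
        for feature, feature_users in users_per_feature.items()
    } if total_users else {}

    return {
        "active_users": total_users,
        "sessions": len(sessions),
        "feature_adoption": adoption,
    }

# Illustrative sample data, not taken from a real deployment.
sample_events = [
    {"user_id": "u1", "session_id": "s1", "feature": "chat"},
    {"user_id": "u1", "session_id": "s1", "feature": "summarize"},
    {"user_id": "u2", "session_id": "s2", "feature": "chat"},
    {"user_id": "u3", "session_id": "s3", "feature": None},
]
report = aggregate_events(sample_events)
```

An aggregate like `report` is the kind of result an external analytics platform would typically visualize; no per-user detail is needed once the counts are computed.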

PhariaStudio

In this release, PhariaStudio is enhanced with OAuth Gateway, enabling secure, seamless connections between PhariaAI and third-party services like Google Drive and Microsoft SharePoint.

OAuth Gateway: Secure Connections to Google Drive and SharePoint

| Availability | On-premise and hosted |

OAuth Gateway enables PhariaAI users to securely authenticate and authorize access to third-party services like Google Drive and Microsoft SharePoint. Acting as an intermediary, the gateway enforces strong authentication and authorization with OAuth 2.0, ensuring safe, seamless integration with external storage. With this feature, users and developers can connect PhariaAI to external data sources in just a few clicks, without fragmented or insecure custom implementations.

Key Benefits:

- Improved Security: Centralized authentication and authorization control reduces vulnerabilities and ensures only approved users or services can access external storage;
- Simplified Access: End-users and developers can easily connect PhariaAI to SharePoint or Google Drive, enabling workflows like conversational search or agent-powered file access;
- Better Compliance & Visibility: Centralized auditing and logging provide IT admins with stronger compliance support and faster detection of unauthorized activity.

Developers can find implementation details and setup instructions in the respective documentation.

The current release supports OAuth 2.0 only; other OAuth versions are not yet supported.

PhariaOS

This release introduces several enhancements, empowering users and developers with greater customization, control, and integration capabilities. From branding the PhariaAssistant interface to invoking external functions, leveraging high-performance embeddings, and producing guaranteed structured outputs, these updates streamline workflows, improve automation, and provide reliable, predictable interactions.

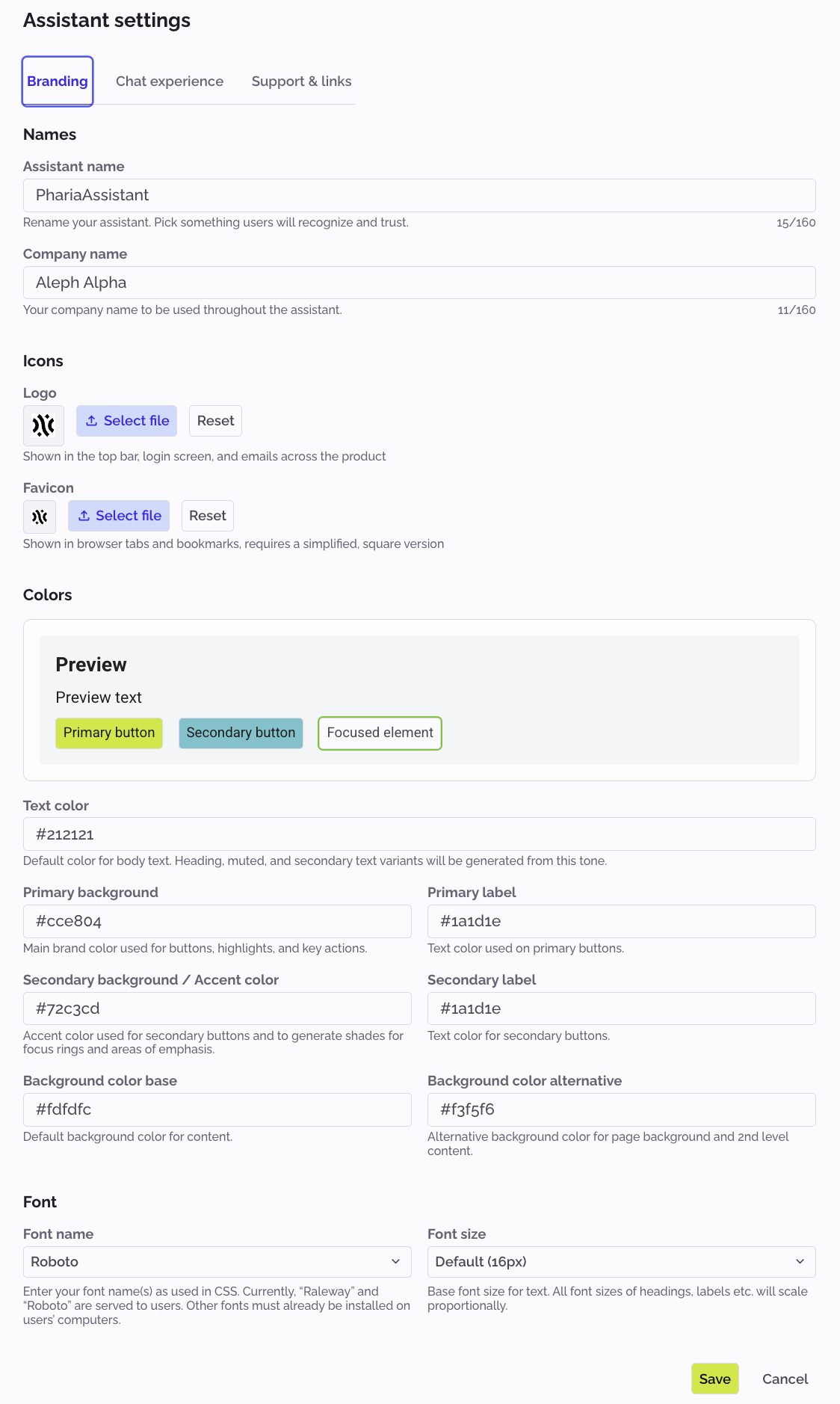

Global UI Customization: Personalize PhariaAssistant’s frontend in PhariaOS UI to match your brand

| Availability | On-premise and hosted |

The Global UI Customization feature empowers users to tailor the frontend appearance of PhariaAssistant directly within the PhariaOS UI, ensuring seamless alignment with corporate branding. Admins can now modify color schemes, logos, and interface elements (e.g., links, organizational components) without requiring backend interventions or engineering support. This marks a shift from code-dependent configurations to dynamic, user-friendly adjustments that propagate instantly across the platform.

Key Benefits:

- Ease of Configuration: Admins can adjust frontend branding and UI elements directly in PhariaOS, eliminating reliance on engineering teams;
- Brand Consistency: Ensure PhariaAssistant reflects organizational identity through customizable logos, colors, and links;
- Reduced Engineering Dependency: Streamline IT workflows by enabling non-developers to manage frontend customizations.

Customization is restricted to predefined UI elements (colors, logos, links), may not dynamically apply to all integrated modules, and advanced frontend changes still require developer involvement.

Tool Calling Support In Inference API: Let LLMs call predefined functions for reliable code and API integration

The Inference API now supports structured function calls (tool calling), empowering developers to automate workflows, execute API requests, and invoke business logic directly from LLM interactions. By returning function names and parameters as JSON that follows a declared schema, the model makes integrations predictable and secure. This marks a shift from free-form text outputs to enforceable, structured actions, streamlining complex automation scenarios and reducing manual intervention.

Key Benefits:

- Expanded Flexibility and Control: Developers can define tools for the LLM to use, ensuring dedicated and enforceable outputs;
- Improved Automation: Seamlessly execute workflows, API calls, or system actions from LLM responses without additional parsing;
- Predictable Integrations: Function calls are now first-class API elements, simplifying development and enhancing security.
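The tool-calling flow described above can be sketched as follows. This is a minimal illustration that assumes the Inference API accepts and returns tool calls in the OpenAI-style chat format (`tools` in the request, `function.name` and `function.arguments` in the response); that shape is an assumption, not confirmed by these notes. The `get_weather` tool, the model name, and the mocked response are hypothetical.

```python
import json

# Hypothetical tool definition in the OpenAI-style "tools" format (assumed shape).
GET_WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Return the current temperature for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

def get_weather(city: str) -> dict:
    """Stand-in business logic; a real version would query a weather service."""
    return {"city": city, "temperature_c": 21}

# Maps tool names the model may return to local Python callables.
TOOL_REGISTRY = {"get_weather": get_weather}

def build_request(model: str, user_message: str) -> dict:
    """Build a chat request body that advertises the available tools."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [GET_WEATHER_TOOL],
    }

def dispatch_tool_call(tool_call: dict) -> dict:
    """Look up the requested function and call it with the decoded arguments."""
    name = tool_call["function"]["name"]
    if name not in TOOL_REGISTRY:
        raise ValueError(f"Model requested unknown tool: {name}")
    arguments = json.loads(tool_call["function"]["arguments"])
    return TOOL_REGISTRY[name](**arguments)

# Mocked tool call, standing in for what a model response might contain.
mock_tool_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Heidelberg"}'},
}
request_body = build_request("example-model", "How warm is it in Heidelberg?")
result = dispatch_tool_call(mock_tool_call)
```

Routing every call through an explicit registry means the model can only trigger functions the developer has deliberately exposed, which is one way to keep tool calling predictable.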

Embedding Model Support Via vLLM: Enable high-throughput, OpenAI-compatible access to modern open-weight models

This enhancement introduces vLLM-powered embedding support to the Inference API, enabling customers to leverage modern open-source embedding models via the /v1/embeddings endpoint. Unlike the previous luminous-inference stack, vLLM offers higher throughput and quality, while the OpenAI-compatible interface simplifies migration and reuse of existing tooling. Customers can now host and use a broader range of open-weight models, future-proofing their workflows and improving downstream applications like RAG (Retrieval-Augmented Generation) with more accurate document retrieval.

Key Benefits:

- Access to SOTA Models: Use cutting-edge open-source embeddings beyond luminous-base and Pharia-1-Embedding;
- Improved Performance & Scalability: Higher throughput and efficiency via the vLLM backend;
- Seamless Integration: OpenAI-compatible endpoint allows reuse of existing SDKs, connectors, and workflows;
- Better RAG Outcomes: Enhanced embedding quality improves document retrieval accuracy and application performance.

Test the feature using the Inference API docs and OpenAI API documentation.

Initial rollout is limited to the Inference API (SDKs/Assistant integrations may require updates), hosted models are restricted to a subset of open-weight options, operational benchmarks are still maturing, and migration to the new endpoint may necessitate workflow adjustments.
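As a rough sketch of how a client might use the OpenAI-compatible `/v1/embeddings` endpoint for retrieval, the example below builds a request and compares two returned vectors. The base URL, token, and model name are placeholders, and the response shape (`data[i].embedding`, `data[i].index`) follows the OpenAI embeddings format, which is assumed here based on the stated compatibility. The request is constructed but not sent.

```python
import json
import math
import urllib.request

def build_embeddings_request(base_url, token, model, texts):
    """Build (but do not send) a POST request for the /v1/embeddings endpoint."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/embeddings",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def extract_vectors(response_json):
    """Return embeddings ordered by their index in the original input list."""
    items = sorted(response_json["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors; 0.0 if either is zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Placeholder values; replace with your deployment's URL, token, and model.
request = build_embeddings_request(
    "https://inference.example.com/", "PLACEHOLDER_TOKEN", "example-embedding-model",
    ["What is PhariaAI?", "Release notes for September"],
)

# Mocked response in the assumed OpenAI-compatible shape.
mock_response = {
    "data": [
        {"index": 1, "embedding": [0.0, 1.0]},
        {"index": 0, "embedding": [1.0, 0.0]},
    ]
}
query_vec, doc_vec = extract_vectors(mock_response)
score = cosine_similarity(query_vec, doc_vec)
```

To send the request, pass it to `urllib.request.urlopen` and decode the JSON body; in a RAG pipeline the similarity score would then rank candidate documents against the query.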

Structured Output with JSON Schema: Guaranteed, parseable responses in the Inference API

Structured Output with JSON Schema enables PhariaAI users to constrain model responses to a predefined JSON schema via the /chat/completions endpoint. This ensures predictable, parseable outputs for applications that invoke external APIs, emit machine instructions, or require structured data. By selecting only tokens that match the schema during the sampling phase, models deliver schema-compliant JSON, provided generation is not cut off by the token limit. Users can invoke structured output directly via the HTTP API, through the Aleph Alpha client using Pydantic objects or JSON Schema, or via the OpenAI client interface.

Key Benefits:

- Guaranteed Schema Compliance: Models produce outputs that strictly adhere to the defined JSON structure, reducing errors in downstream workflows;
- Flexible Integration Options: Support for Aleph Alpha client (Pydantic/JSON Schema), OpenAI client, and direct API calls makes adoption seamless for developers;
- Reliable Automation: Structured output supports robust applications that rely on predictable machine-readable responses, improving API calls, automation, and integrations.

Developers can refer to the detailed documentation for usage examples, including Python client integration with Pydantic models, JSON Schema definitions, OpenAI client parsing, or raw HTTP API requests.

Structured output is currently enabled only for vLLM workers. In addition, if the maximum token limit is reached before the JSON object is complete, the output may not be valid JSON even with structured output enabled.
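Because a token-limit cutoff can leave the JSON incomplete, client code may want to check for truncation before parsing. The sketch below assumes the OpenAI-style request field `response_format` with `type: "json_schema"` and the OpenAI-style response fields `finish_reason` and `message.content`; whether the Inference API uses exactly these names is an assumption. The schema, model name, and mocked responses are illustrative only.

```python
import json

# Illustrative schema; any valid JSON Schema object could be used instead.
PERSON_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "age": {"type": "integer"},
    },
    "required": ["name", "age"],
}

def build_request(model: str, prompt: str, max_tokens: int) -> dict:
    """Build a /chat/completions body that asks for schema-constrained output."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        # Assumed OpenAI-style field for requesting structured output.
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "person", "schema": PERSON_SCHEMA},
        },
    }

def parse_structured_reply(response_json: dict) -> dict:
    """Parse the first choice, refusing output that hit the token limit."""
    choice = response_json["choices"][0]
    if choice.get("finish_reason") == "length":
        raise ValueError("Output was cut off at the token limit; JSON may be incomplete")
    return json.loads(choice["message"]["content"])

# Mocked responses in the assumed shape: one complete, one truncated.
complete = {
    "choices": [
        {"finish_reason": "stop", "message": {"content": '{"name": "Ada", "age": 36}'}}
    ]
}
truncated = {
    "choices": [
        {"finish_reason": "length", "message": {"content": '{"name": "Ad'}}
    ]
}
person = parse_structured_reply(complete)
```

When the truncation error is raised, a reasonable reaction is to retry with a larger `max_tokens` value rather than attempt to repair the partial JSON.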

Other updates

Migration of API credentials

With v1.250900.0, PhariaAI introduces a new format for API credentials and now stores them in Postgres instead of Zitadel.

- Existing API credentials continue to work and are migrated automatically on first deployment of v1.250900.0;
- Important for IT Admins: Service users and tokens created directly in the Zitadel console will no longer function. API credentials should now only be created via PhariaOS;
- If service user tokens were created in Zitadel, they will still be migrated, but only once you restart the pharia-iam service.

Stability improvements

- Unified multiple services (migration-job, sparse-embedding-service) on the pharia-common library to enable extended Helm configuration and reduce fragmentation;
- Disabled IPv6 listening in NGINX to improve network stability and prevent configuration issues.